Seven Steps To Reduce AI Bias

Bias. Everyone has it. Every piece of software is susceptible to it. Every citizen wants less of it. Here’s how to reduce it in your data science projects.

www.techno-sapien.com

“Bias doesn’t come from AI algorithms, it comes from humans,” explains Cassie Kozyrkov in her Towards Data Science article, What is Bias? So if we’re the source of AI bias and risk, how do we reduce it?

It’s not easy. Cognitive science research shows that humans are unable to identify their own biases. And since humans create algorithms, bias blind-spots will multiply unless we create systems to shine a light, gauge risks, and systematically eliminate them.

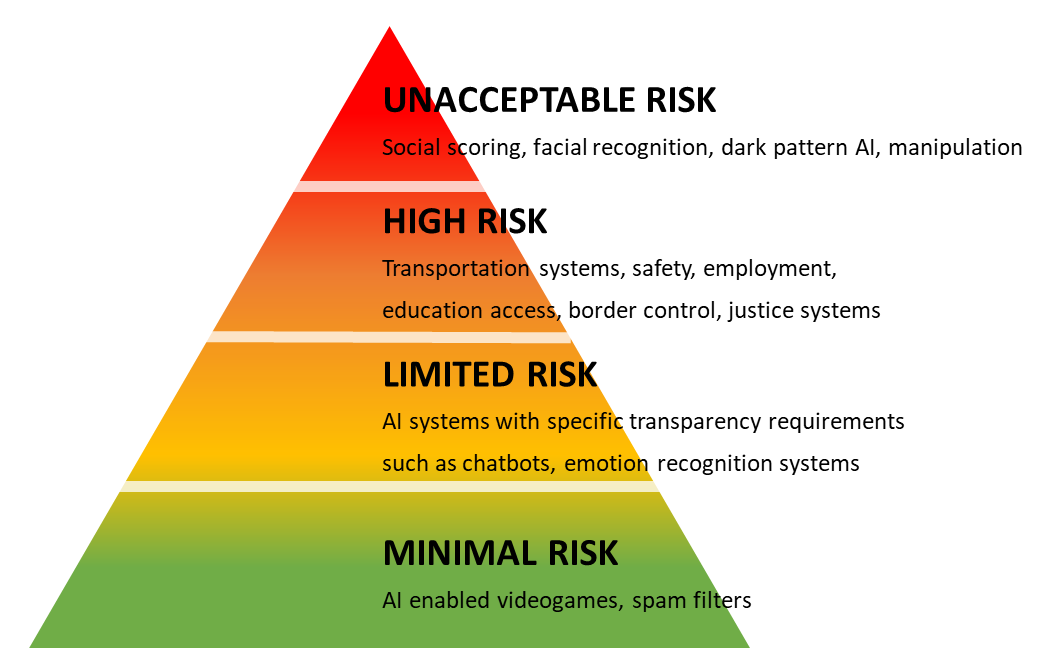

The European Union tasked a team of AI professionals with defining a framework to help characterize AI risk and bias. The EU Artificial Intelligence Act (EU AIA) is intended to form a blueprint for human agency and oversight of AI, including guidelines for robustness, privacy, transparency, diversity, well-being, and accountability.

What are their recommendations, and how do they benefit your business? And how can technology help put wind in the sails of AI adoption? The seven steps they recommend, and the concrete actions for fulfilling them described below, are a great place to start. But first, let’s review their “algorithmic risk triangle,” which characterizes risk on a scale from minimal to unacceptable.

What Kind of Bias is Acceptable?

As Lori Witzel explains in 5 Things You Must Know Now About the Coming EU AI Regulation, the EU Artificial Intelligence Act (EU AIA) defines four levels of risk in terms of their potential harm to society, and therefore how important each is to address. For example, the risk of AI used in video games and email spam filters pales in comparison to its use in social scoring, facial recognition, and dark pattern AI.

The framework aids understanding but does not prescribe what to do about each level of risk. The team does present seven key principles of AI trustworthiness, which this article uses as guardrails for an action plan to mitigate algorithmic bias:

Principle #1: Human agency and oversight. The EU AIA team said, “AI systems should empower human beings, allowing them to make informed decisions and fostering their fundamental rights. At the same time, proper oversight mechanisms are needed through human-in-the-loop, human-on-the-loop, and human-in-command approaches.”

What they don’t prescribe is how.

Human-in-the-loop systems are facilitated by what Gartner calls Model Operationalization, or ModelOps. ModelOps tools act like an operating system for algorithms: they manage the process that helps algorithms travel the last mile into use by the business.

ModelOps tools provide human-in-command controls that give humans agency and oversight over how algorithms perform now, how they have performed historically, and where they may be biased.

Model analytics in ModelOps tools help humans peer inside an algorithm’s judgments, decisions, and predictions. By analyzing algorithm metadata (data about AI data), human observers see, in real time, what algorithms are doing.
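As a rough illustration of what a human-in-the-loop gate can look like, the sketch below auto-decides only when a model's score is far from its cutoff and sends borderline cases to a person. Every name here (`route`, `Decision`, `REVIEW_BAND`) and every threshold is a hypothetical chosen for the example, not part of any ModelOps product or of the EU text.

```python
from dataclasses import dataclass

# Hypothetical width of the "uncertain" band around the decision cutoff.
REVIEW_BAND = 0.15


@dataclass
class Decision:
    applicant_id: str
    risk_score: float  # assumed model output in [0, 1]; higher means riskier
    outcome: str       # "approve", "decline", or "human_review"


def route(applicant_id: str, risk_score: float, cutoff: float = 0.5) -> Decision:
    """Auto-decide only when the score is far from the cutoff; otherwise escalate."""
    if abs(risk_score - cutoff) < REVIEW_BAND:
        return Decision(applicant_id, risk_score, "human_review")
    outcome = "decline" if risk_score >= cutoff else "approve"
    return Decision(applicant_id, risk_score, outcome)


# Three made-up applicants: clearly low risk, borderline, clearly high risk.
decisions = [route("a-1", 0.10), route("a-2", 0.55), route("a-3", 0.92)]
review_queue = [d for d in decisions if d.outcome == "human_review"]
print([d.outcome for d in decisions])
```

Widening `REVIEW_BAND` sends more cases to people and fewer to the algorithm, which is one simple dial for how much human agency a deployment keeps.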

The dashboard below compares the actions of two algorithms and the factors involved in their predictions for a banking algorithm that assesses loan risk. The algorithm metadata for the “champion” model is purple, and a proposed “challenger” model is red.

On the top left, we see that the challenger model is “more accurate.” But at what cost?

At the lower right, the bar chart shows how these two algorithms arrive at their decisions. We can see at a glance that the production (champion) algorithm weights prior loan delinquency and payment history more than the challenger. We also see that the challenger model more heavily considers the size of the loan, how much savings the requester has, and credit history.

Is this a fair and balanced assessment of risk? Does the measure of account balance skew bias toward economically advantaged borrowers? Age is a less dominant factor in the challenger model; is that good? Analytics like this give humans the agency and oversight to ask and answer these questions for themselves.
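The same comparison can be sketched in a few lines of code. The feature weights below are invented for illustration; they only mirror the direction of the differences described above and are not taken from the dashboard. `weight_shifts` is a hypothetical helper.

```python
# Hypothetical, normalized feature weights for two loan-risk models.
champion = {
    "prior_delinquency": 0.30, "payment_history": 0.25, "loan_size": 0.10,
    "savings_balance": 0.10, "credit_history": 0.10, "age": 0.15,
}
challenger = {
    "prior_delinquency": 0.18, "payment_history": 0.17, "loan_size": 0.20,
    "savings_balance": 0.22, "credit_history": 0.18, "age": 0.05,
}


def weight_shifts(old: dict, new: dict) -> list:
    """Return (feature, new - old) pairs, largest absolute shift first."""
    features = sorted(set(old) | set(new))
    shifts = [(f, round(new.get(f, 0.0) - old.get(f, 0.0), 4)) for f in features]
    return sorted(shifts, key=lambda pair: abs(pair[1]), reverse=True)


shifts = weight_shifts(champion, challenger)
for feature, delta in shifts:
    if delta > 0:
        direction = "more"
    elif delta < 0:
        direction = "less"
    else:
        direction = "the same"
    print(f"{feature:18s} {delta:+.2f}  (challenger relies on it {direction})")
```

Sorting by the size of the shift puts the features that deserve a human's attention first, whichever direction they moved.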
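One way to start answering questions like these is to compare outcome rates across groups. The sketch below computes approval rates per group and the ratio between the lowest and highest rate. The decision records are made up, the grouping by savings band is an assumption for the example, and `approval_rates` and `parity_ratio` are hypothetical helper names.

```python
from collections import defaultdict


def approval_rates(records):
    """records: iterable of (group, approved) pairs -> {group: approval rate}."""
    totals, approved = defaultdict(int), defaultdict(int)
    for group, ok in records:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def parity_ratio(rates):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved in any group, so the rates are equal
    return min(rates.values()) / highest


# Hypothetical challenger decisions, grouped by the applicant's savings band.
challenger_decisions = (
    [("low_savings", True)] * 30 + [("low_savings", False)] * 70
    + [("high_savings", True)] * 60 + [("high_savings", False)] * 40
)

rates = approval_rates(challenger_decisions)
ratio = parity_ratio(rates)
print(rates, round(ratio, 2))
```

A commonly cited rule of thumb, the four-fifths rule, treats a ratio under 0.8 as a signal worth investigating. A low ratio does not prove unfair bias on its own, but it tells human reviewers where to look.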

Principle #2: Technical robustness and safety. The EU AIA team explains, “AI systems need to be resilient and secure. They need to be safe, have a fallback plan when something goes wrong, and be reliable and reproducible. That is the only way to ensure that unintentional harm can be minimized and prevented.”

AI is deployed in many forms, and each deployment should include the up-to-date security, authentication, code-signing, and scalability capabilities that any enterprise-class technology typically includes. These include, but are not limited to, two-factor sign-in, security models, and robust DevOps deployment paradigms.


The type of safety needed depends on where the deployment happens. For example, a wearable device that runs an algorithm must itself be secured for the algorithm to be secure. An effective AI deployment plan applies all these technologies and their management.
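The "fallback plan when something goes wrong" in the principle above can be as simple as a wrapper around the model call. The sketch below is one possible shape, under the assumption that scores are risk values in [0, 1]; `conservative_rule`, `score_with_fallback`, and the rule's thresholds are hypothetical.

```python
import logging

logger = logging.getLogger("scoring")


def conservative_rule(applicant: dict) -> float:
    """Hypothetical fallback: a simple, auditable rule used when the model fails."""
    return 0.9 if applicant.get("prior_delinquencies", 0) > 0 else 0.5


def score_with_fallback(model, applicant: dict) -> tuple:
    """Return (score, source); fall back if the model raises or returns an invalid score."""
    try:
        score = float(model(applicant))
        if not 0.0 <= score <= 1.0:  # also rejects NaN
            raise ValueError(f"score out of range: {score}")
        return score, "model"
    except Exception as exc:
        logger.warning("model failed, using fallback: %s", exc)
        return conservative_rule(applicant), "fallback"


def broken_model(applicant):
    """Stand-in for a scoring service that is down."""
    raise TimeoutError("scoring service unreachable")


healthy = score_with_fallback(lambda a: 0.2, {"prior_delinquencies": 0})
degraded = score_with_fallback(broken_model, {"prior_delinquencies": 2})
print(healthy, degraded)
```

Returning the source alongside the score keeps the behavior reproducible: anyone reviewing a decision later can tell whether the model or the fallback rule produced it.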

Principle #3: Privacy and data governance. The EU AI group warns, “AI systems must also ensure adequate data governance mechanisms, taking into account the quality and integrity of the data and ensuring legitimized access to data.”

Agile data fabrics provide secure access to data in any corporate silo, both to protect that data and to evaluate the results of the actions taken or recommended by AI models. The data fabric must adhere to privacy and data protection regulations such as the General Data Protection Regulation (GDPR) in the EU and the California Consumer Privacy Act (CCPA) in California, and software vendors should follow ISO standards for best-practice software development.

Agile data fabrics help ensure algorithms power trusted risk and bias observations, with proper security and protections over data.

Modern data management, data quality, and data governance tooling helps meet these requirements and should be part of any AI blueprint implementation. For more on developments of agile data fabrics, read Your Next Enterprise Data Fabric on techno-sapien.com.

Principle #4: Transparency. The EU AI team advises that data, systems, and AI business models be transparent and that traceability mechanisms help achieve this. Moreover, AI systems and their decisions should be explained in a manner adapted to the stakeholder concerned. Finally, the EU AI team advises that humans be made aware that they’re interacting with an AI system and be informed of its capabilities and limitations.


Transparency is a blend of proper disclosure, documentation, and technology. Technologically, data fabrics and model operationalization tools track and expose data through changelogs and history, so the actions of AI models can be traced and played back. This data and model traceability, combined with proper disclosures and documentation, helps make the data used, the decisions made, and the implications of those decisions more transparent across the entire organization and to customers and partners.

Principle #5: Diversity, non-discrimination, and fairness. Unfair bias must be avoided because it could have multiple negative implications, from the marginalization of vulnerable groups to the exacerbation of prejudice and discrimination. The EU AI team suggests that to foster diversity, AI systems should be accessible to all, regardless of any disability, and involve relevant stakeholders throughout their entire life cycle.
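To make the idea of a traceable changelog concrete, here is a minimal sketch of a tamper-evident decision log, in which each record's hash covers the previous record. It is an illustration only, not how any particular data fabric or ModelOps tool stores history; the function names, field names, and model version string are assumptions.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first record


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON rendering of the record body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_entry(log: list, model_version: str, inputs: dict, output: float) -> dict:
    """Append a decision record whose hash also covers the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {"model_version": model_version, "inputs": inputs,
            "output": output, "prev_hash": prev_hash}
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify(log: list) -> bool:
    """Recompute every hash; False if any record was altered, removed, or reordered."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, "challenger-v2", {"loan_size": 12000}, 0.31)
append_entry(audit_log, "challenger-v2", {"loan_size": 50000}, 0.74)
print(verify(audit_log))
```

A production log would also record timestamps and who approved each model version; those are left out here to keep the example short and deterministic.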

Bias mitigation is a robust field of research. As Nobel Prize-winning psychologist and economist Daniel Kahneman explains in Noise, reducing bias is hard. But the solution is hidden in plain sight, or even on some TV game shows: ask a friend. That is, have someone else identify it for you! Kahneman calls these “friends” Decision Observers. Systems, process, and cultural thinking are paramount to algorithmic bias mitigation.

Bias mitigation is hard. One way of facilitating it is to ask other people to assess it. In Noise, Daniel Kahneman calls these people Decision Observers, and AI risk mitigation tools help facilitate that collaboration.

The collaborative features of agile data fabrics and AI model operationalization tools support teamwork on AI bias analysis and mitigation, and are therefore essential tools to consider when implementing the EU AI blueprint.

Principle #6: Societal and environmental well-being. AI systems should benefit all humans, including future generations. Firms using AI should consider the environment, including other living beings, and their social and societal impact. Adoption of the EU AI’s principles helps form a well-considered AI culture, an essential step forward in pursuing this well-being.

Principle #7: Accountability. Uh-oh! Here comes the regulation! Maybe. The EU approach of providing guidelines and principles is a constructive alternative to the heavy-handed regulation often found in, for example, the United States. The EU AI team suggests self-guided mechanisms to ensure responsibility and accountability for AI systems and their outcomes.

The subject of regulation, oversight, and accountability for AI ethics is massive. Modern data fabric and Model Operationalization tools are the technological foundation of a new AI culture. They promise to raise awareness of algorithmic risk and bias, and in so doing, raise the accountability bar.

Algorithmic Risk and Bias Mitigation in Action in the Insurance Industry

The Automobile Association of Ireland specializes in home, motor, and travel insurance and provides emergency rescue for people in their homes and on the road, attending to over 140,000 car breakdowns every year, 80% of which are fixed on the spot.

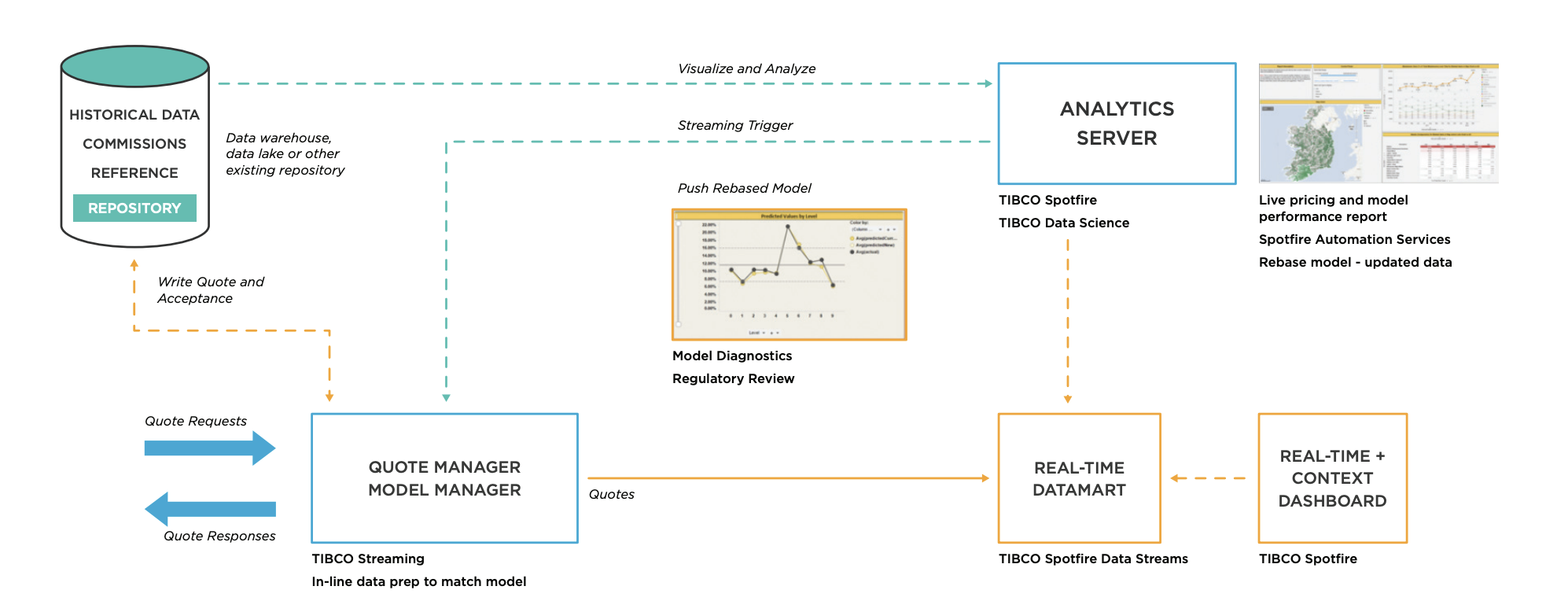

AA Ireland builds algorithms to “do things like fraud identification and embedded customer value and then update those models in live environments.”

This dashboard shows model performance and diagnostics assessment for rebasing: Predictions for particular variables of interest to the business.

Colm Carey, chief analytics officer at AA Ireland, explains, “Insurance has always had predictive models, but we would build something, and in three months, update it. Data comes in and goes out to models seamlessly without disruption, basically providing real-time predictability. Rather than just predicting, it’s ‘If I increase or decrease discounts, what’s the uplift in volume and profitability?’

These principles help the business be able to ask: What should I do differently? How should my algorithms change?

“You can understand the total opportunities and risks in the market and make informed decisions. And then, you can ask: What should I do differently? How should my pricing change? How should I facilitate that in the call center? You can understand it all — plus segmentation, fraud modeling, and underwriter profit. We’re going to use it for long-term predictability of call center capacity and CRM modeling, campaigns, and return of investment from them, and how to price a product.”

The result is precisely what the EU AI team suggests: human-in-command tools for real-time assessment and adjustment of algorithmic behavior.

The system works by absorbing historical data, commissions, and reference data (top left). Data and models are operationalized to the analytics / ModelOps server (top right), for real-time scoring from requests for quotes from clients (bottom left).

For a detailed case study on the application architecture and more visualizations, see the AA Ireland case study listed in the resources at the end of this article.

The AI Trust Blueprint: Helping AI Benefit Everyone

The ambition of the EU AI group is to help AI benefit all human beings, including future generations. But, as with any technology, its use requires careful consideration and cultural change. Their blueprint, the seven principles they offer, and these actions can help.

The EU AI report strikes a needed balance between human agency and technology. Combined, they put wind in AI’s sails and help ensure we’re sailing in a more just and fair direction as we explore artificial intelligence’s exciting possibilities.

Explore These Resources Mentioned in this Article, for More Information

What is Bias? by Cassie Kozyrkov in Towards Data Science

Excellent summary of the EU AI Act by Eve Gaumond: Artificial Intelligence Act: What Is the European Approach for AI?

Full text for the EU Artificial Intelligence Act: Laying Down Harmonized Rules on Artificial Intelligence (Artificial Intelligence Act) and Amending Certain Union Legislative Acts

On Model Operationalization technology: Techno-Sapien on ModelOps

On Data Fabric Technology: Techno-Sapien on Data Fabric

The Business Implications of the EU AI Act: Lori Witzel on 5 Things You Must Know Now About the Coming EU AI Regulation

Feeling and Thinking, by R. B. Zajonc, on human affect and decision-making.

Noise, by Daniel Kahneman, on Bias and Decision Observer teams.

Closed Loop Continuous Learning Case Study: Dynamic Online Pricing at AA Ireland, TIBCO Software

Note: TIBCO has filed a patent application that covers certain aspects of what we’ve described here.