Seven Mistakes You’re Making As You Build a Data Science Team

The common thread of effective data science is leadership, culture and collaboration.

www.techno-sapien.com

So you want to do more and better data science. Good for you! But what’s your plan? Hire some data scientists and expect insights to magically appear?

With data science, like many things, hope is not a strategy. There are over a dozen disciplines associated with AI, so where do you start? How do you make data science a cultural norm shared by all? How do you better find and root out algorithmic bias?

Here are seven mistakes to avoid as you build your data science team and what to do about them.

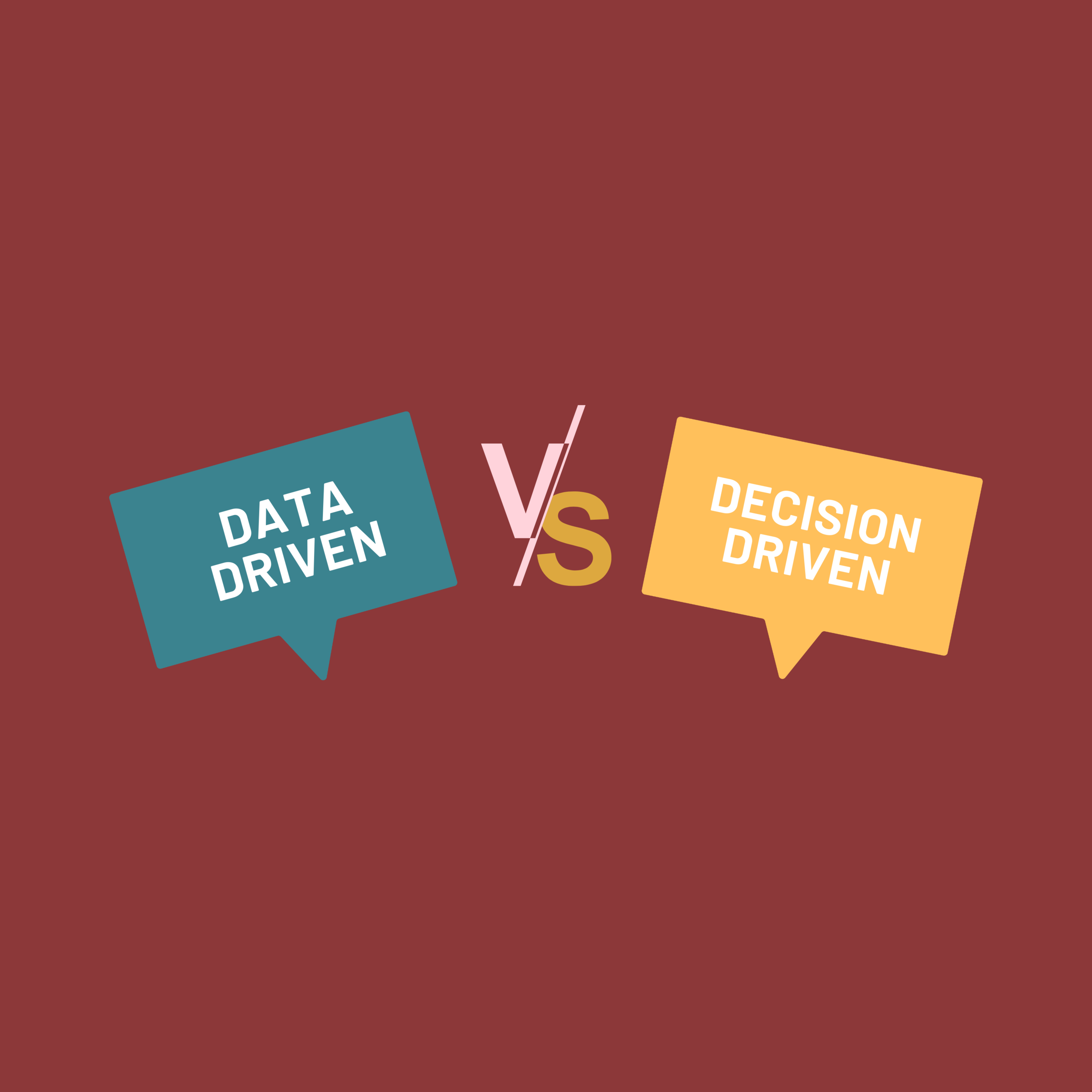

ONE: You strive to be data-driven, not decision-driven. Pablo Picasso said, “the problem with computers is all they can do is provide answers.” His message is profound: don’t let tech lead; lead your tech. Be decision-driven, not data-driven.

Decision-driven and data-driven thinking differ in seven ways:

Decision-driven thinking starts with questions, not data

Decision-makers lead projects, not data scientists

You ponder unknowns more than knowns

You search wide, then deep

You reach for new sources of data

You include risk and bias in your thinking

You look forward, not only in the rearview mirror

Read Be Decision-Driven Not Data-Driven for more.

TWO: You build your team in the wrong order. If you’re staffing data science teams with data scientists first, you might not be approaching AI from the best angle.

In The Top 10 Roles in AI and Data Science, Chief Decision Scientist at Google Cassie Kozyrkov presents a prioritized list of data science roles to hire. The first two are data engineer and decision-maker….

Data scientist doesn’t show up until NUMBER 6.

Cassie suggests you appoint decision-makers first to focus on question-framing. And they’ll need data engineers like an explorer needs a guide. That’s a great duo to start your data science journey with.

THREE: You let data-scientist-inmates run the asylum. Data scientists should NOT lead data science projects; decision-makers should. Algorithms don’t make decisions; humans do.

Kozyrkov explains, “Decision-makers are best placed to frame questions worth answering and understand the potential impact on the business. Look for a deep thinker who doesn’t say, ‘Whoops, that didn’t even occur to me as I was thinking through this decision.’ Decision-makers already thought of it. And that. And that too.”

Imagine you’re an explorer riding an AI horse. Once trained, horses run and listen for commands. Like horses, algorithms do a lot of the work. AI-driven chatbot responses, driver-assisted car movements, and high-tech manufacturing robotics run on their own, but they are still steered by humans.

Humans program these algorithms like you train a horse; their decisions are your decisions. And sometimes, they need to be reined in.

Work with data scientists to train your algorithms well.

FOUR: You don’t employ Decision Observers to assess and act on algorithmic bias and risk. Bias. Everyone has it. Every application and every data set might harbor it. Every citizen wants less of it.

But recognizing bias in oneself is nearly impossible. Cognitive science research (1) confirms that humans are unable to identify their own biases. And since humans create algorithms, blind spots will multiply unless we create systems to shine a light on them, gauge their risks, and systematically eliminate them.

So, how can we manage what we can’t see?

As Nobel Prize-winning psychologist and economist Daniel Kahneman explains in Noise, reducing bias is hard. But the solution is hidden in plain sight, or even on some TV game shows: ask a friend. That is, have someone else identify it for you! Kahneman calls these “friends” Decision Observers.

As with heads, two Decision Observers are better than one. Lewis had Clark. Indiana Jones had Short Round. So, consider forming teams to assess bias and decide how to act.
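As one illustration of what a team of Decision Observers might look at, here is a minimal Python sketch that compares how often an algorithm's decisions are favorable for different groups. Everything in it is an assumption made for illustration: the function names, the group labels, and the sample decisions are hypothetical, and this simple rate comparison is not a method taken from Noise. A large gap does not prove bias; it is a prompt for the observers to ask why.

```python
from collections import defaultdict


def favorable_rate_by_group(decisions):
    """Return the share of favorable decisions for each group.

    `decisions` is an iterable of (group, is_favorable) pairs.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, is_favorable in decisions:
        totals[group] += 1
        if is_favorable:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def largest_gap(rates):
    """Difference between the highest and lowest favorable rate."""
    return max(rates.values()) - min(rates.values())


# Hypothetical sample: 10 decisions per group (illustrative data only).
sample_decisions = (
    [("group_a", True)] * 8 + [("group_a", False)] * 2
    + [("group_b", True)] * 5 + [("group_b", False)] * 5
)

rates = favorable_rate_by_group(sample_decisions)
gap = largest_gap(rates)
print(rates)
print(f"Largest gap between groups: {gap:.2f}")
```

The point of the sketch is the conversation it starts, not the number itself: the observers, not the algorithm, decide whether a gap is justified and what to do about it.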

FIVE: You don’t balance data scientists with AI architects and statisticians. Many data scientists are terrible computer scientists. That is, they don’t think about how algorithms act in production, which requires software design, performance tuning, testing, and security.

AI architects own the deployment aspects of AI. They carry algorithms that last mile to operational use. They package algorithms for use inside chatbots. Embed predictions in business intelligence tools. Program them for real-time execution in robots.

Statistical analysis is the precursor to modern AI modeling, yet some firms neglect it. Too often, multiple decision-makers look at the same results and reach different conclusions. Statisticians help describe risk and probability even when, on average, a model is useful. For example, how big will bad outcomes be, and how likely are they?
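To make that last question concrete, here is a minimal Python sketch of the kind of summary a statistician might add next to an average. The function name, the threshold, and the sample outcomes are hypothetical and chosen only for illustration; a real analysis would use the firm's own data and whatever risk measures fit the decision.

```python
import statistics


def risk_summary(outcomes, bad_threshold, percentile=5):
    """Summarize the average outcome plus the size and likelihood of bad ones.

    `outcomes` is a list of numeric results (for example, profit per decision).
    An outcome counts as "bad" when it falls below `bad_threshold`.
    """
    ordered = sorted(outcomes)
    bad = [x for x in ordered if x < bad_threshold]
    # Nearest-rank percentile, computed with integer arithmetic (ceiling division).
    rank = max(1, -(-percentile * len(ordered) // 100))
    return {
        "mean": statistics.mean(ordered),
        "probability_bad": len(bad) / len(ordered),
        "mean_bad": statistics.mean(bad) if bad else None,
        "low_percentile": ordered[rank - 1],
    }


# Hypothetical outcomes: nine modest gains and one large loss.
sample_outcomes = [10.0] * 9 + [-50.0]

summary = risk_summary(sample_outcomes, bad_threshold=0.0)
print(summary)
```

In this made-up sample the average outcome is positive, so the model looks useful on average, yet one decision in ten loses five times what a typical decision gains. That is the gap between "useful on average" and "safe to rely on" that a statistician helps decision-makers see.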

So, balance data scientists with statisticians and AI architects to deploy AI more effectively.

SIX: You don’t convert domain experts into citizen data scientists. Data science is an art, not only a science. Effective use of AI requires domain expertise.

A citizen data scientist is a domain expert who knows enough data science to put it into practice. While a data scientist might spend 80% of their time doing research and 20% talking with the business, the citizen data scientist spends 80% of their time understanding the impact of algorithms.

Most domain experts are not data scientists, and they don’t have to be. But they do need basic training in data science to understand what it can do. So, train domain experts and analysts on basic data science concepts to create your own citizen data scientists.

Empower citizen data scientists who focus on what data science means to the business.

SEVEN: You make data science complicated. Some of the most effective algorithms are the simplest ones. Why Subtraction Might Be the Best Data Science Algorithm in Your Bag explores one of the most innovative periods of trading on Wall Street and an algorithm that required only subtraction.

Jeff McMillan at Morgan Stanley says, “We find that, with data science, the single most powerful driver of client satisfaction is a phone call.”

The common thread of effective data science is humanity

These seven mistakes, and their solutions, have a common thread: humanity. Don’t delegate data science to data scientists. Explore the unknown. Spend lots of time posing questions. Treat algorithms as part of your team, and you’ll win!

FOOTNOTE (1)

Daniel Kahneman was awarded the 2002 Nobel Memorial Prize in Economic Sciences (shared with Vernon L. Smith). His latest book, Noise, contributed the concept of Decision Observers for bias identification, and his foundational research with Amos Tversky and others is summarized in the New York Times best-selling book Thinking, Fast and Slow.

Robert B. Zajonc was ranked by a Review of General Psychology survey in 2002 as the 35th most cited psychologist of the 20th century. His paper Feeling and Thinking: Preferences Need No Inferences explains that feelings, not data, govern human decision-making.